Data Analytics, The History

Summary

To understand current analytics offerings, strategy, and promises you have to understand its past. GenAI, Semantic Layer, Data Contracts, to even ETL had its origins starting in the 80s with multiple waves of the same issues we face today, and tomorrow. Let’s take a look back, and see where we will be investing in the future.

Analytics of the Past

1990s & 2000s - BI

Business Intelligence (BI) was one of the first data industry teams that focused on providing business value with tabular data. Data was processed in pre-cloud instances, and BI tools processed the data locally with closed sourced engines advertised as robust and performant, and directly impactful to businesses. Moreover, it promised the advancements of doing high-level analytics, telemetry, and insights. However, that failed.

You can see this in the late 1990s and 200s, where Business Objects, Oracle, SAP, and IBM Cognos dominated that BI landscape. These platforms were owned by IT departments, as it required their involvement. This ownership was an achilles heel to business analysts, quants, and business users waiting for weeks and months for new schema changes and new reports. Dashboards were clunky, expensive, slow, and depended on old ETL pipelines in on-premise data centers.

The role BI Engineer came to be. These specialists were someone who managed OLAP cubes, ETL pipelines, and administration configurations, a step away from Data Architects. However, these contributions too were more gatekeepers, and not enablers. It’s not their fault, as their job was to systems online and explore new datasets. Tools like Informatica came out, but was not enough to surface to business users.

Some other issues of this era were the following:

Cost of Licenses

BI teams siloed into COGs or Center of Excellence groups

Data Modeling followed star schema and slow changing dimensions, and ad-hoc SQL query sheets

Usage & Retention was low: Less than 30% of employees at large companies used BI dashboards

Metrics

Thoughts?

I don’t care what anyone says, this era was siloed, non-agile, monolithic, and unproductive compared to the vision…

But those experiences would experience cracks of light from new innovation.

2010s - Big Data

Data volume rapidly increased with the rise of web and mobile innovation, and thus the standard BI tools couldn’t handle the scale of wide and deeper datasets. “Data is the New Oil“ became a mantra in both tech and larger private & public sectors. Thankfully with the advent of open-sourced solutions, the industry received Apache Hadoop ecosystem in early 2010s. It provided advantages like storing massive amounts of semi-structured and unstructured data cheaply in distributed clusters, and query it later using MapReduce.

Open Source gave rise to solutions like":

Apache Hive (2008 via Facebook): Produced SQL-like abstraction over Hadoop to distribute data

Presto/Trino (2012 via Facebook): Brought faster, interactive querying to petabyte scale data

Storage became cheaper, there was a better low-latency query offering, and less bureaucracy occurred. These solutions would eventually become pivotal in this “Data Lake” era. Enterprise moved away from tightly structured data warehouses to flexible, schema-on-read approaches.

Still, we would have (new) issues like the following:

Query Latency could be minutes to hours

Which BI Tool? (Tableau, Power BI, Sisense, Jupyter Notebooks?)

Lack of data governance (e.g. authentication, administration, testing)

Shifting Ownership: Ownership passed from IT to Data Engineering groups..still not in analytics teams

Steep Onboarding Curve (learning HiveQL, Java UDFs, even Scala)

Brittle Orchestration (OSS solutions Airflow & Oozie 1.0 were hard to debug and work with)

And we would have roles have new responsibilities like “data swamp”, “data plumbers", “data wranglers“ and more. These individuals would become closer in community with things like Data Hackathons in SQL files and Jupyter Notebooks importing Pandas and SciKit-Learn.

It was exciting times, yet unclear on unified goal of how to build data stack and strategy.

Then, ushered in a new era…Modern Data Stack.

Analytics Today

Late 2010s - 2024 - Modern Data Stack

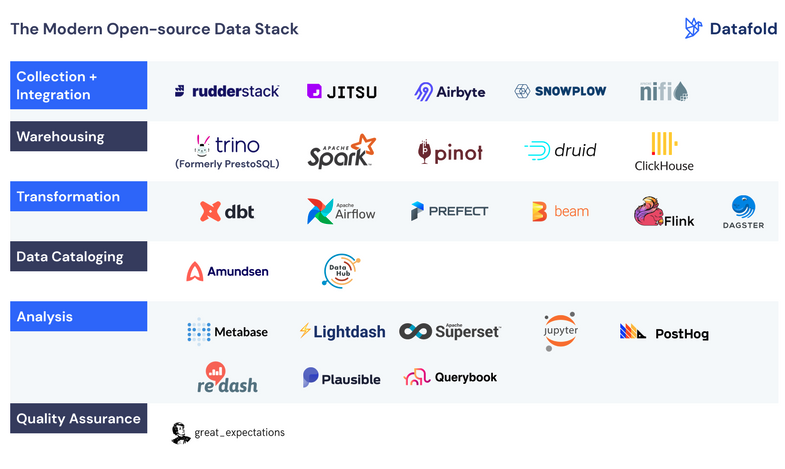

The Modern Data Stack (MDS) emerged to tackle the new painpoints that Hadoop/Redshift era had with solutions such as:

Snowflake (2014): Cloud Data storage and compute for elasticity, ease of use, and quick results

Google BigQuery (2011): Serverless on-demand analytics at scale that would compete with Snowflake and (soon) DataBricks. It was easy to use, had auto-scaling, and part of GSuite ecosystem

dbt (2016): brought software engineering principles (version controlm, testing, modularity) into SQL to produce data models. This ushered in new role group Analytics Engineers.

Fivetran Stich, Airbyte made ingestion simple and managed going from ETL to ELT

Looker, Mode, Sisense, Metabase, Sigma simplified visualization and self-serve analytics

This was an evolution of brittle open-source orchestration, query, and storage tools from the previous section. It was great because this new era changes usability, prioritization, and politics of analytics by enabling:

Analysts now queried terabytes in seconds, using ANSCII-SQL or other related standards (not HiveQL lol)

Modeling now owned by analytics team, and not IT or even Data Engineering

Semantic Layer, Data Models, Kimball Method, etc

Resources and Headcount change - Silos to division, embedded hubs, or hybrid teams

(This also was called Modern Data Analytics)

By 2023, 80% of enterprise workloads had either fully migrated or considering a hybrid cloud-first architecture. Moreover, there was interest in new methodologies like Reverse ETL, Operational Analytics, Metric Trees, to Medallion datasets.

2024 and the Future - VibEDA & GenAnalytics

What about the future? We are now learning that tools and resources like Snowflake, DataBricks, NVIDIA, LLMs, and Agents are going to take my job…maybe?

Recently attending Data Council 2025 in Oakland, CA we see that there are attempts of automating this job persona. However, it has it’s shortcomings.

Can GenAI intentionally quickly solve how to be intentional with data? Can it connect Business Goals (e.g. OKRS) with metrics. Or will insurmountable costs will keep the Data Analyst persona alive?

If you guess the hint at the end, pure GenAI is not the solution. But augmenting that into your workflow and becoming proficient within analytics workflow will make you successful in your future career. The future belongs to those who turn analytics into strategic leverage with newfound GenAnalytics and VibeEDA workflow.

Let’s explore how to not just keep your seat at the table…but lead it.